How do you ‘Add Mirror’ to a disk containing data when the option is grayed out, even though you have another, very similar, empty disk available? Check that both disks are “Dynamic” and shrink the volume on the disk with data!


I had a 2 TB disk with a single volume (on one partition), containing data.

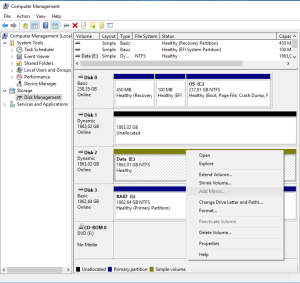

I added a new 2 TB disk to be used as a mirror of the existing one. I went into the Disk Management tool to “Add Mirror” but found that this option was not available: the menu entry was disabled.

First thing to check: the two disks to be used must be “Dynamic”. Right-click on “Disk x” to “Convert to Dynamic Disk …”.

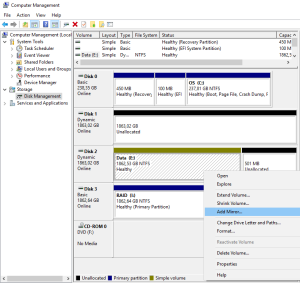

Next, to be able to create a mirror, the system needs some free space to create its own reserved partition on the disk. So, right-click on the volume of the disk with data and choose “Shrink Volume…” (try first with 1 MB). Once the volume has been shrunk, the menu “Add Mirror…” becomes accessible.
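The same two preparation steps can also be done from DiskPart instead of the GUI. Below is a minimal sketch in Python that only builds the text of a DiskPart script; it does not touch any disk itself. The function name `build_prepare_script` and its parameters are my own illustration, and the disk and volume numbers are placeholders you must read from `list disk` and `list volume`. It relies on the DiskPart commands `convert dynamic` and `shrink desired=<size in MB>`. If one of the disks is already dynamic, `convert dynamic` may report an error, so drop that line in that case.

```python
def build_prepare_script(data_disk: int, mirror_disk: int, volume: int,
                         shrink_mb: int = 1) -> str:
    """Return DiskPart commands that convert both disks to dynamic
    and shrink the data volume by shrink_mb megabytes."""
    if shrink_mb < 1:
        raise ValueError("shrink_mb must be at least 1")
    lines = [
        f"select disk {data_disk}",    # disk holding the data
        "convert dynamic",
        f"select disk {mirror_disk}",  # empty disk that will become the mirror
        "convert dynamic",
        f"select volume {volume}",     # volume to be mirrored
        f"shrink desired={shrink_mb}", # size is expressed in MB
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical numbering: data on disk 0, new disk is disk 1, volume 3.
    print(build_prepare_script(data_disk=0, mirror_disk=1, volume=3))
```

Save the printed text to a file (for example `prepare.txt`) and run it from an Administrator prompt with `diskpart /s prepare.txt`, after double-checking the numbers.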

Et voilà.

NB.:

- It does not matter if the disks are MBR or GPT.

- Once the volume has been shrunk, you may have to reboot.

- If you encounter an issue while adding a mirror, do a “Check Disk” (Properties > Tools > Check).

- If you really cannot get rid of the error “DiskPart has referenced an object which is not up-to-date.” even after rebooting, do an “Extend Volume” of 1 MB! (Sounds silly, I know…) and then “Add Mirror”.

- In my case, while the disks were re-syncing, I removed the mirror, did an “Extend Volume” to reuse the whole free space, and was still able to do an “Add Mirror”.

- MS Partition & Volume Info here.

Mirror using DiskPart

- Run DiskPart in a cmd prompt started as Administrator

- list disk

- select disk x [x=disk containing the volume to be mirrored]

- list volume

- select volume x [x=volume to be mirrored]

- add disk=x [x=disk to be used for mirroring]
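The steps above can also be put in a script file and run with `diskpart /s`. Here is a minimal sketch in Python that only generates the script text. The function name `build_mirror_script` and its parameters are hypothetical, and the numbers are placeholders to be taken from `list disk` and `list volume` on your own machine. It assumes both disks are already dynamic.

```python
def build_mirror_script(source_disk: int, volume: int, mirror_disk: int) -> str:
    """Return DiskPart commands that add mirror_disk as a mirror
    of the given volume located on source_disk."""
    if source_disk == mirror_disk:
        raise ValueError("the mirror must be on a different disk")
    lines = [
        "list disk",
        f"select disk {source_disk}",  # disk containing the volume to be mirrored
        "list volume",
        f"select volume {volume}",     # volume to be mirrored
        f"add disk={mirror_disk}",     # disk to be used for mirroring
    ]
    return "\n".join(lines) + "\n"


if __name__ == "__main__":
    # Hypothetical numbering: volume 3 on disk 0, mirrored onto disk 1.
    print(build_mirror_script(source_disk=0, volume=3, mirror_disk=1))
```

Write the output to a file such as `mirror.txt` and run `diskpart /s mirror.txt` in a cmd prompt started as Administrator.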
