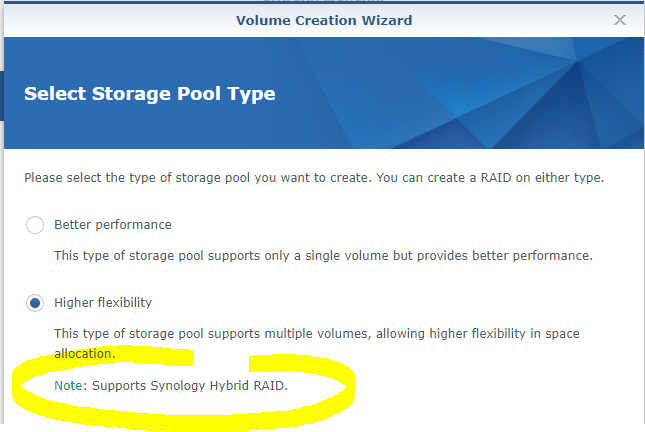

I had wanted to try this for years… I finally did it successfully on a virtual Synology with an array of 5 disks in a single volume using btrfs (see here for the VM and btrfs).

This is a summary of my attempts. It is based on various posts found on the web:

- https://superuser.com/questions/834100/shrink-raid-by-removing-a-disk

- https://unix.stackexchange.com/questions/67702/how-to-reduce-volume-group-size-in-lvm#67707

- https://blog.reboost.net/manually-removing-a-disk-from-a-synology-raid/

Note that the XFS file system can only be extended, not reduced, so I only tried with ext4 and btrfs.

Although I used a DSM with Video Station, Photo Station, Web Station and WordPress, this was not a “real case” (most of the data were still on the first sectors of the disks). So I would really not recommend doing this on a real NAS!!!

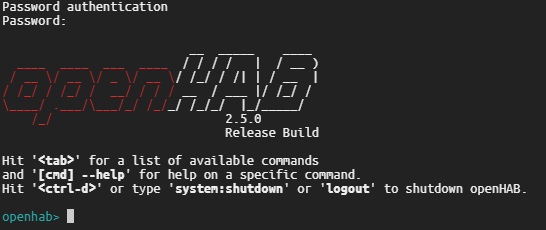

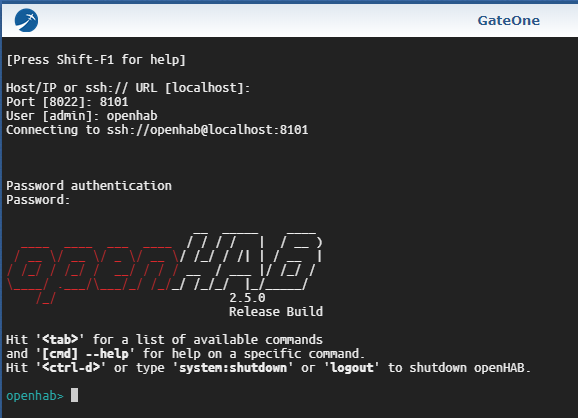

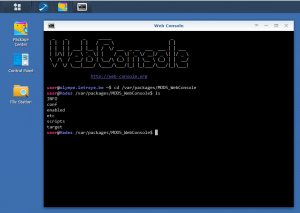

Open an SSH console and switch to root (sudo -i) to execute the commands below.

To identify which processes are accessing a volume, you may need lsof (only if umount fails):

Install OPKG: see https://www.beatificabytes.be/use-opkg-instead-of-ipkg-on-synology/

Install lsof: /opt/bin/opkg install lsof

Layout of the Physical Volume / Volume Group / Logical Volumes:

Find the file system of /volume1:

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/md0 2.3G 1016M 1.2G 46% /

none 2.0G 0 2.0G 0% /dev

/tmp 2.0G 536K 2.0G 1% /tmp

/run 2.0G 3.2M 2.0G 1% /run

/dev/shm 2.0G 4.0K 2.0G 1% /dev/shm

none 4.0K 0 4.0K 0% /sys/fs/cgroup

cgmfs 100K 0 100K 0% /run/cgmanager/fs

/dev/vg1000/lv 14G 3.2G 9.9G 25% /volume1
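df -h shows which device is mounted on /volume1 (here /dev/vg1000/lv) but not its file system type. A minimal sketch that reads the type from a mounts table such as /proc/mounts; `fs_type_of` is a hypothetical helper name of mine, and `df -T /volume1` should show the same information if your df supports -T.

```shell
#!/bin/sh
# Hypothetical helper: print the file system type of a mount point.
# Usage: fs_type_of MOUNTPOINT [MOUNTS_FILE]
# MOUNTS_FILE defaults to /proc/mounts (fields: device mountpoint type options ...).
fs_type_of() {
    mp=$1
    mounts=${2:-/proc/mounts}
    # Keep the last matching entry, in case something was mounted on top of it.
    awk -v mp="$mp" '$2 == mp { t = $3 } END { if (t != "") print t }' "$mounts"
}

# On the NAS this prints e.g. "btrfs" or "ext4":
# fs_type_of /volume1
```

The result tells you which of the two shrink sections below applies.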

- Show Physical Volume: pvdisplay

- Show Volume Group: vgdisplay

- Show Logical Volume: lvdisplay

- Show Disks: fdisk -l

Check whether /volume1 can be unmounted:

umount /dev/vg1000/lv

umount: /volume1: target is busy

(In some cases useful info about processes that use the device is found by lsof(8) or fuser(1).)

Stop all services:

synopkg onoffall stop

/usr/syno/etc.defaults/rc.sysv/S80samba.sh stop

/usr/syno/etc.defaults/rc.sysv/S83nfsd.sh stop

/usr/syno/etc.defaults/rc.sysv/pgsql.sh stop

/usr/syno/etc.defaults/rc.sysv/synomount.sh stop
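The stop commands above can also be run from a small loop. A minimal sketch, assuming the same script paths as above; the `run` helper and the `DRY_RUN` variable are hypothetical additions so that the commands can be printed for review before being executed.

```shell
#!/bin/sh
# Hypothetical helper: print the command if DRY_RUN is set, otherwise execute it.
run() {
    if [ -n "${DRY_RUN:-}" ]; then
        echo "$*"
    else
        "$@"
    fi
}

# Stop the packages first, then the system services used in this post.
stop_services() {
    run synopkg onoffall stop
    for svc in S80samba.sh S83nfsd.sh pgsql.sh synomount.sh; do
        run "/usr/syno/etc.defaults/rc.sysv/$svc" stop
    done
}

# Preview, then run for real:
# DRY_RUN=1 stop_services
# stop_services
```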

Check which daemons are still using /volume1:

/opt/bin/lsof | grep volume1

COMMAND PID TID USER FD TYPE DEVICE SIZE/OFF NODE NAME

s2s_daemo 10868 root 8u REG 0,30 11264 608 /volume1/@S2S/event.sqlite

synologan 8368 root 3u REG 0,30 3072 654 /volume1/@database/synologan/alert.sqlite

synoindex 8570 root mem REG 0,28 7510 /volume1/@appstore/PhotoStation/usr/lib/libprotobuf-lite.so (path dev=0,30)

synoindex 8570 root mem REG 0,28 30143 /volume1/@appstore/VideoStation/lib/libdtvrpc.so (path dev=0,30)

lsof 8585 root txt REG 0,30 147560 32161 /volume1/@entware-ng/opt/bin/lsof
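To keep only the distinct commands still holding files on /volume1, the lsof output can be filtered. A minimal sketch, assuming the lsof column layout shown above (COMMAND first); `holders_of` is a hypothetical name. The path is matched anywhere on the line because memory-mapped entries end with "(path dev=...)" rather than with the file name. Note that lsof truncates command names to 9 characters by default (s2s_daemo is s2s_daemon).

```shell
#!/bin/sh
# Hypothetical helper: print the distinct COMMAND names of processes whose
# open files live under a given path. Reads lsof output on stdin.
# Usage: /opt/bin/lsof | holders_of /volume1
holders_of() {
    prefix=$1
    # Skip the header line; match "<prefix>/" anywhere on the line.
    awk -v p="$prefix/" 'NR > 1 && index($0, p) > 0 { print $1 }' | LC_ALL=C sort -u
}
```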

Kill those daemons:

initctl list | grep synoindex

synoindexcheckindexbit stop/waiting

synoindexd start/running, process 11993

killall synoindexd

initctl list | grep synologan

synologand start/running, process 8368

killall synologand

This does not work… synologand restarts immediately ☹ So remove its execute permission and kill it again:

chmod u-x /usr/syno/sbin/synologand

killall synologand

killall s2s_daemon

Shrink the ext2 or ext4 File System:

Only do the steps below if you use ext2 or ext4 instead of btrfs.

Resize the File System:

umount -d /dev/vg1000/lv

e2fsck -C 0 -f /dev/vg1000/lv

e2fsck 1.42.6 (21-Sep-2012)

Pass 1: Checking inodes, blocks, and sizes

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

/lost+found not found. Create<y>? yes

Pass 4: Checking reference counts

Pass 5: Checking group summary information

1.42.6-23739: ***** FILE SYSTEM WAS MODIFIED *****

1.42.6-23739: 30742/889440 files (0.7% non-contiguous), 734048/3553280 blocks

NB. e2fsck -C 0 already displays a progress bar. To make a running e2fsck start displaying it: killall -USR1 e2fsck

NB. To make it stop displaying the progress bar (the check itself continues): killall -USR2 e2fsck

resize2fs -p -M /dev/vg1000/lv

resize2fs 1.42.6 (21-Sep-2012)

Resizing the filesystem on /dev/vg1000/lv to 702838 (4k) blocks.

The filesystem on /dev/vg1000/lv is now 702838 blocks long.

If you see the error message below, you probably have a file system other than ext2 or ext4 (e.g. btrfs, …):

resize2fs: Bad magic number in super-block while trying to open /dev/vg1000/lv

Couldn't find valid filesystem superblock.

If you get this error message although you do have ext2 or ext4, try: lvm lvchange --refresh /dev/vg1000/lv

Check the result:

mount /dev/vg1000/lv /volume1

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/md0 2.3G 968M 1.3G 44% /

none 2.0G 0 2.0G 0% /dev

/tmp 2.0G 512K 2.0G 1% /tmp

/run 2.0G 3.0M 2.0G 1% /run

/dev/shm 2.0G 4.0K 2.0G 1% /dev/shm

none 4.0K 0 4.0K 0% /sys/fs/cgroup

cgmfs 100K 0 100K 0% /run/cgmanager/fs

/dev/vg1000/lv 2.6G 2.5G 0 100% /volume1

Resize the Logical Volume:

Resize the logical volume to a size slightly larger than the file system (see the output of df -h above):

umount /dev/vg1000/lv

lvm lvreduce -L 2.7G /dev/vg1000/lv

Rounding size to boundary between physical extents: 2.70 GiB

WARNING: Reducing active logical volume to 2.70 GiB

THIS MAY DESTROY YOUR DATA (filesystem etc.)

Do you really want to reduce volume_1? [y/n]: y

Size of logical volume vg1/volume_1 changed from 13.55 GiB (3470 extents) to 2.70 GiB (692 extents).

Logical volume volume_1 successfully resized.
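The rule "logical volume slightly larger than the file system" can be checked with plain arithmetic. A minimal sketch, assuming 4 KiB file system blocks (as reported by resize2fs) and 4 MiB physical extents (13.55 GiB for 3470 extents above suggests that size; check "PE Size" in vgdisplay); `lv_fits_fs` is a hypothetical name. With the figures above, 702838 blocks × 4 KiB ≈ 2745 MiB, while 692 extents × 4 MiB = 2768 MiB, so the file system fits.

```shell
#!/bin/sh
# Hypothetical helper: succeed (exit status 0) if an LV of LV_EXTENTS extents
# can hold a file system of FS_BLOCKS blocks.
# Usage: lv_fits_fs FS_BLOCKS LV_EXTENTS [BLOCK_BYTES] [EXTENT_MIB]
# Defaults are assumptions: 4096-byte blocks, 4 MiB extents.
lv_fits_fs() {
    fs_bytes=$(( $1 * ${3:-4096} ))
    lv_bytes=$(( $2 * ${4:-4} * 1024 * 1024 ))
    echo "fs=${fs_bytes} bytes lv=${lv_bytes} bytes"
    [ "$fs_bytes" -le "$lv_bytes" ]
}

# Figures from this post: resize2fs -M -> 702838 blocks, lvreduce -> 692 extents.
lv_fits_fs 702838 692 && echo "OK: the file system fits in the LV"
```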

NB: to get progress, use: LV=/dev/vg1000/lv; echo $(lvdisplay -v $LV | grep current | wc -l) $(lvdisplay -v $LV | grep stale | wc -l) | awk '{ printf("%3d percent Complete\n", 100-$2/$1*100) }'

Shrink a BTRFS File System:

Only do the steps below if you use btrfs.

Resize the File System:

btrfs filesystem resize 2.7G /volume1

You can restore the maximum size if required: btrfs filesystem resize max /volume1

df -h

Filesystem Size Used Avail Use% Mounted on

/dev/vg1000/lv 2.6G 2.5G 1.4G 71% /volume1

Resize the Logical Volume:

umount -d /dev/vg1000/lv

lvm lvreduce -L 2.7G /dev/vg1000/lv

Next steps:

The steps below apply to both ext2/ext4 and btrfs.

Resize the Physical Volume:

Identify the device and check whether blocks must be moved:

pvdisplay -C

PV VG Fmt Attr PSize PFree

/dev/md2 vg1 lvm2 a-- 13.57g 10.85g

pvs -v --segments /dev/md2

Using physical volume(s) on command line.

Wiping cache of LVM-capable devices

PV VG Fmt Attr PSize PFree Start SSize LV Start Type PE Ranges

/dev/md2 vg1000 lvm2 a-- 14.00g 440.00m 0 3473 lv 0 linear /dev/md2:0-3472

/dev/md2 vg1000 lvm2 a-- 14.00g 440.00m 3473 110 0 free

If there is an allocated block after the “free” segment (there was none in my case), move it with:

lvm pvmove --alloc anywhere /dev/md2:xxx-xxx
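Whether an allocated segment sits after a free one can be checked from the pvs --segments output. A minimal sketch, assuming segments are listed in ascending Start order and that free segments end with the type "free", as in the output above; `trailing_alloc` is a hypothetical name. It prints the PE range of each segment that would need a pvmove.

```shell
#!/bin/sh
# Hypothetical helper: read `pvs -v --segments PV` output on stdin and print the
# PE range of every allocated segment that comes after a free segment.
# Exit status 0 if at least one such segment exists, 1 otherwise.
trailing_alloc() {
    awk '
        $1 !~ /^\/dev\// { next }             # skip header and informational lines
        $NF == "free"    { seen_free = 1; next }
        seen_free        { print $NF; found = 1 }
        END              { exit (!found) }
    '
}

# On the NAS:
# pvs -v --segments /dev/md2 | trailing_alloc && echo "pvmove needed"
```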

Resize the physical volume to a size slightly larger than the logical volume. If the size is too small, you will get an error message:

pvresize --setphysicalvolumesize 2.7G /dev/md2

/dev/md2: cannot resize to 691 extents as 695 are allocated.

0 physical volume(s) resized / 1 physical volume(s) not resized

pvresize --setphysicalvolumesize 2.8G /dev/md2

Physical volume "/dev/md2" changed

1 physical volume(s) resized / 0 physical volume(s) not resized
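The failed attempt can be explained by counting extents: 2.7 GiB is about 2764 MiB (2.7 × 1024 = 2764.8), i.e. only 691 extents of 4 MiB, while 695 extents are allocated; 2.8 GiB (about 2867 MiB) gives 716 extents. A minimal sketch of that check, assuming 4 MiB extents and ignoring the small LVM metadata area at the start of the PV (so keep a margin); `pv_extents_for` and `pv_size_ok` are hypothetical names.

```shell
#!/bin/sh
# Hypothetical helper: number of whole extents that fit in SIZE_MIB mebibytes
# (LVM metadata area ignored). Usage: pv_extents_for SIZE_MIB [EXTENT_MIB]
pv_extents_for() {
    echo $(( $1 / ${2:-4} ))
}

# Succeed if a PV of SIZE_MIB can hold ALLOCATED extents.
# Usage: pv_size_ok SIZE_MIB ALLOCATED [EXTENT_MIB]
pv_size_ok() {
    [ "$(pv_extents_for "$1" "${3:-4}")" -ge "$2" ]
}

allocated=695                   # from the pvresize error message above
for size_mib in 2764 2867; do   # about 2.7 GiB and 2.8 GiB
    if pv_size_ok "$size_mib" "$allocated"; then
        echo "$size_mib MiB: $(pv_extents_for "$size_mib") extents, OK"
    else
        echo "$size_mib MiB: $(pv_extents_for "$size_mib") extents, too small"
    fi
done
```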

Reshape the array to use fewer disks:

Reduce the array to 3 disks (-n3):

mdadm --grow -n3 /dev/md2

mdadm: this change will reduce the size of the array.

use --grow --array-size first to truncate array.

e.g. mdadm --grow /dev/md2 --array-size 7114624

You first have to reduce the array size:

mdadm --grow /dev/md2 --array-size 7114624

Then start the reshape to 3 disks in the background:

mdadm --grow -n3 /dev/md2 --backup-file /root/mdam.md2.backup &
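The size suggested by mdadm is the usable capacity of a RAID 5 array with the new number of disks: one member's worth of space holds parity, so the size is (number of disks - 1) × per-device size, in KiB. With the per-device size of 3557312 KiB (the "Used Dev Size" of mdadm --detail), 3 disks give 2 × 3557312 = 7114624. A minimal sketch; `raid5_array_size` is a hypothetical name, and the formula only applies to RAID 5.

```shell
#!/bin/sh
# Hypothetical helper: usable size (KiB) of a RAID 5 array with N_DISKS
# members of DEV_KIB each (one member's worth of space holds parity).
# Usage: raid5_array_size N_DISKS DEV_KIB
raid5_array_size() {
    echo $(( ($1 - 1) * $2 ))
}

# Per-device size as reported for md2 in this post.
raid5_array_size 3 3557312
```

With 5 disks the same formula gives 14229248 KiB, about 13.57 GiB, which matches the PSize shown by pvdisplay -C before the reshape.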

Monitor the progress of the resizing with:

cat /proc/mdstat

Personalities : [linear] [raid0] [raid1] [raid10] [raid6] [raid5] [raid4] [raidF1]

md2 : active raid5 sdak5[4] sdaj5[3] sdai5[2] sdah5[1] sdag5[0]

7114624 blocks super 1.2 level 5, 64k chunk, algorithm 2 [3/3] [UUU]

[======>..............] reshape = 31.7% (1129600/3557312) finish=0.3min speed=112960K/sec

md1 : active raid1 sdak2[4] sdaj2[3] sdai2[2] sdah2[1] sdag2[0]

2097088 blocks [12/5] [UUUUU_______]

md0 : active raid1 sdak1[4] sdaj1[3] sdag1[0] sdah1[1] sdai1[2]

2490176 blocks [12/5] [UUUUU_______]

unused devices: <none>

This can take a lot of time, but you can continue meanwhile (I did wait 😉).
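Instead of re-running cat /proc/mdstat by hand, the reshape percentage can be extracted and polled. A minimal sketch, assuming the "reshape = NN.N%" wording shown above (a resync or recovery is reported with a different word); `reshape_progress` is a hypothetical name.

```shell
#!/bin/sh
# Hypothetical helper: print the reshape percentage found in mdstat-formatted
# text on stdin (prints nothing if no reshape is reported).
reshape_progress() {
    sed -n 's/.*reshape *= *\([0-9.]*\)%.*/\1/p'
}

# Poll every 30 seconds until no reshape is reported any more:
# while p=$(reshape_progress < /proc/mdstat); [ -n "$p" ]; do
#     echo "reshape: $p%"
#     sleep 30
# done
```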

Finalize:

pvresize /dev/md2

Physical volume "/dev/md2" changed

1 physical volume(s) resized / 0 physical volume(s) not resized

lvextend -l 100%FREE /dev/vg1000/lv

Size of logical volume vg1/volume_1 changed from 2.70 GiB (692 extents) to 4.07 GiB (1041 extents).

Logical volume volume_1 successfully resized.

For btrfs (the file system must be mounted, e.g. with mount /dev/vg1000/lv /volume1):

btrfs filesystem resize max /volume1

For ext2/ext4:

e2fsck -f /dev/vg1000/lv

e2fsck 1.42.6 (21-Sep-2012)

Pass 1: Checking inodes, blocks, and sizes

Running additional passes to resolve blocks claimed by more than one inode...

Pass 1B: Rescanning for multiply-claimed blocks

Multiply-claimed block(s) in inode 13: 9221

Pass 1C: Scanning directories for inodes with multiply-claimed blocks

Pass 1D: Reconciling multiply-claimed blocks

(There are 1 inodes containing multiply-claimed blocks.)

File /@tmp (inode #13, mod time Sat May 23 23:00:14 2020)

has 1 multiply-claimed block(s), shared with 0 file(s):

Multiply-claimed blocks already reassigned or cloned.

Pass 2: Checking directory structure

Pass 3: Checking directory connectivity

Pass 4: Checking reference counts

Pass 5: Checking group summary information

1.42.6-23739: 30742/179520 files (0.7% non-contiguous), 685397/720896 blocks

Hope that no inodes are corrupted. Otherwise… well… accept the fixes.

resize2fs /dev/vg1000/lv

resize2fs 1.42.6 (21-Sep-2012)

Resizing the filesystem on /dev/vg1/volume_1 to 1065984 (4k) blocks.

The filesystem on /dev/vg1/volume_1 is now 1065984 blocks long.

mount /dev/vg1000/lv /volume1

Restart services:

/usr/syno/etc.defaults/rc.sysv/S80samba.sh start

/usr/syno/etc.defaults/rc.sysv/S83nfsd.sh start

/usr/syno/etc.defaults/rc.sysv/pgsql.sh start

/usr/syno/etc.defaults/rc.sysv/synomount.sh start

synopkg onoffall start

Remove the disks that are now spares from the array:

mdadm --detail --scan

ARRAY /dev/md0 metadata=0.90 UUID=3b122d95:7efea8ff:3017a5a8:c86610be

ARRAY /dev/md1 metadata=0.90 UUID=bd288153:d00708bf:3017a5a8:c86610be

ARRAY /dev/md2 metadata=1.2 spares=2 name=DS3617_62:2 UUID=875ad2d6:956306b7:8c7ba96b:4287f6e6

mdadm --detail /dev/md2

/dev/md2:

Version : 1.2

Creation Time : Sat May 23 14:00:02 2020

Raid Level : raid5

Array Size : 7114624 (6.79 GiB 7.29 GB)

Used Dev Size : 3557312 (3.39 GiB 3.64 GB)

Raid Devices : 3

Total Devices : 5

Persistence : Superblock is persistent

Update Time : Sat May 23 18:54:30 2020

State : clean

Active Devices : 3

Working Devices : 5

Failed Devices : 0

Spare Devices : 2

Layout : left-symmetric

Chunk Size : 64K

Name : DS3617_62:2 (local to host DS3617_62)

UUID : 875ad2d6:956306b7:8c7ba96b:4287f6e6

Events : 56

Number Major Minor RaidDevice State

0 8 5 0 active sync /dev/sda5

1 8 37 1 active sync /dev/sdc5

2 8 53 2 active sync /dev/sdd5

3 8 69 - spare /dev/sde5

4 8 85 - spare /dev/sdf5

Above, we see that sde5 and sdf5 are unused (spares):
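Finding the spares and building the fail/remove commands can also be scripted from the mdadm --detail output. A minimal sketch, assuming the device table layout shown above (State "spare" just before the device path); `spares_of` and `print_removal_cmds` are hypothetical names. It only prints the commands, so you can review them before running them.

```shell
#!/bin/sh
# Hypothetical helper: print the spare member devices listed in
# `mdadm --detail ARRAY` output read on stdin.
spares_of() {
    awk '$NF ~ /^\/dev\// && $(NF - 1) == "spare" { print $NF }'
}

# Hypothetical helper: print (do not run) the commands that would fail and
# remove each device read on stdin from ARRAY.
print_removal_cmds() {
    array=$1
    while read -r dev; do
        echo "mdadm --fail $array $dev"
        echo "mdadm --remove $array $dev"
    done
}

# On the NAS (review the output, then run the commands):
# mdadm --detail /dev/md2 | spares_of | print_removal_cmds /dev/md2
```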

mdadm --fail /dev/md2 /dev/sde5

mdadm: set /dev/sde5 faulty in /dev/md2

mdadm --fail /dev/md2 /dev/sdf5

mdadm: set /dev/sdf5 faulty in /dev/md2

mdadm --remove /dev/md2 /dev/sde5

mdadm: hot removed /dev/sde5 from /dev/md2

mdadm --remove /dev/md2 /dev/sdf5

mdadm: hot removed /dev/sdf5 from /dev/md2

Et voilà.
